Matrices are fundamental objects in linear algebra, with applications across mathematics, physics, computer science, and engineering. Some quantities attached to a matrix are invariants: they stay the same under a change of basis, so similar matrices share them. In this blog post, we will explore three key invariants of matrices: the determinant, eigenvalues, and rank.

The Determinant: A Measure of Matrix Transformations

The determinant of a square matrix is a single scalar computed from the entries of the matrix. It is denoted by |A| or det(A), and it is defined only for square matrices.

The determinant has several important properties and applications:

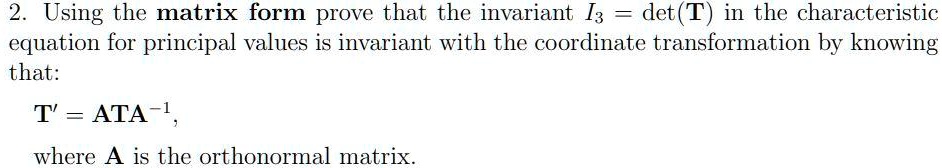

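- Unique Value: Each square matrix has exactly one determinant. Elementary row operations change it in predictable ways: swapping two rows flips its sign, multiplying a row by a scalar c multiplies the determinant by c, and adding a multiple of one row to another leaves it unchanged.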

- Multiplicative Property: The determinant of the product of two square matrices of the same size equals the product of their determinants: det(AB) = det(A) det(B). Two consequences follow: det(A⁻¹) = 1/det(A) for an invertible matrix, and similar matrices have the same determinant.

- Invertibility: A square matrix is invertible if and only if its determinant is non-zero. An invertible matrix represents a transformation that can be undone, and a linear system Ax = b with invertible A has exactly one solution.

- Volume Scaling: The absolute value of the determinant is the factor by which the matrix scales area (in 2D) or volume (in higher dimensions). A positive determinant means the transformation preserves orientation, a negative determinant means it reverses orientation (as a reflection does), and a zero determinant means the object is flattened into a lower dimension.
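The multiplicative property and the effect of row operations can be checked by hand on small matrices. The following is a minimal sketch in plain Python; the helper names `det2` and `matmul2` and the matrices `A` and `B` are made up for illustration.

```python
def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    return a * d - b * c


def matmul2(x, y):
    """Product of two 2x2 matrices."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


A = [[2, 1], [5, 3]]  # det(A) = 2*3 - 1*5 = 1
B = [[1, 4], [2, 6]]  # det(B) = 1*6 - 4*2 = -2

# Multiplicative property: det(AB) equals det(A) * det(B).
product_det = det2(matmul2(A, B))

# Swapping the two rows flips the sign of the determinant.
swapped_det = det2([A[1], A[0]])

# Adding 3 times the first row to the second leaves the determinant unchanged.
sheared = [A[0], [A[1][j] + 3 * A[0][j] for j in range(2)]]
sheared_det = det2(sheared)

print(product_det, det2(A) * det2(B))  # -2 -2
print(swapped_det, sheared_det)        # -1 1
```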

For a 2x2 matrix with rows (a, b) and (c, d), the determinant is ad - bc: the product of the main-diagonal elements minus the product of the off-diagonal elements. For larger matrices, cofactor expansion along a row or column reduces an n x n determinant to a signed sum of (n-1) x (n-1) determinants. Because the cost of cofactor expansion grows factorially with n, large determinants are usually computed by Gaussian elimination instead.
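As an illustration of cofactor expansion, here is a short recursive sketch in plain Python. The function name `det` and the example matrix `M` are assumptions made for this example, and the approach is only practical for small matrices.

```python
def det(m):
    """Determinant by cofactor expansion along the first row."""
    n = len(m)
    if n == 1:
        return m[0][0]
    if n == 2:
        return m[0][0] * m[1][1] - m[0][1] * m[1][0]
    total = 0
    for j in range(n):
        # Minor: the matrix left after deleting row 0 and column j.
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        # The sign alternates +, -, +, ... across the first row.
        total += (-1) ** j * m[0][j] * det(minor)
    return total


M = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]

print(det(M))  # 2*(3*4 - 2*1) - 0 + 1*(1*1 - 3*1) = 18
```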

The determinant is a powerful tool for analyzing matrix transformations and solving systems of linear equations. It provides insights into the behavior and properties of matrices, making it an essential concept in linear algebra.

Eigenvalues: Unveiling the Matrix's Characteristic Values

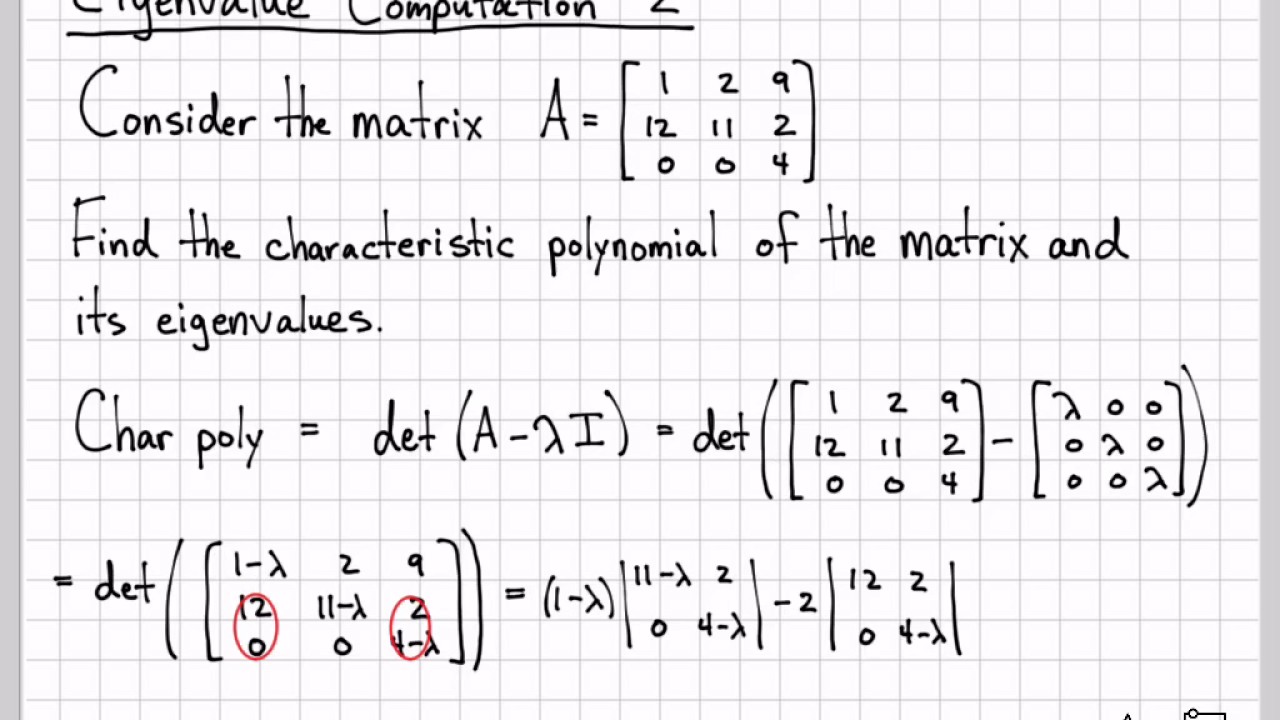

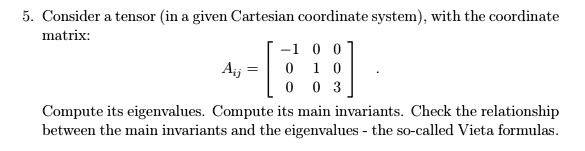

Eigenvalues are scalar values associated with a square matrix that provide information about its structure and behavior. They are the roots of the characteristic equation of the matrix, which is obtained by setting the characteristic polynomial equal to zero.

Eigenvalues have several significant properties and applications:

- Characteristic Equation: The characteristic equation is given by |A - λI| = 0, where A is the matrix, λ is the eigenvalue, and I is the identity matrix. Solving this equation yields the eigenvalues of the matrix.

- Eigenvectors: Each eigenvalue λ has corresponding eigenvectors: non-zero vectors v that satisfy Av = λv. Along the direction of an eigenvector, the matrix acts as simple scaling by λ, stretching, shrinking, or reversing the vector without rotating it off its line.

- Diagonalization: An n x n matrix can be diagonalized if it has n linearly independent eigenvectors. In that case A = PDP⁻¹, where the columns of P are the eigenvectors and D is the diagonal matrix of eigenvalues. This simplifies matrix powers, since A^k = PD^kP⁻¹, and makes matrix exponentials easier to compute.

- Spectral Theorem: The spectral theorem states that a real symmetric matrix has real eigenvalues and an orthonormal basis of eigenvectors, so it can always be diagonalized by an orthogonal matrix. This theorem has important implications in various fields, including quantum mechanics and statistics.
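To make the eigenvector relation and the spectral theorem concrete, the sketch below checks Av = λv for a small symmetric matrix and confirms that its eigenvectors are orthogonal. The matrix `S`, its eigenpairs, and the helper names `matvec` and `dot` are chosen for illustration.

```python
def matvec(m, v):
    """Multiply a matrix (nested lists) by a vector (list)."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]


def dot(u, v):
    """Dot product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


S = [[2, 1],
     [1, 2]]  # real symmetric matrix

# Hand-computed eigenpairs (eigenvalue, eigenvector) of S.
pairs = [(1, [1, -1]), (3, [1, 1])]

# Each pair should satisfy S v = lambda * v.
checks = [matvec(S, v) == [lam * x for x in v] for lam, v in pairs]

# Eigenvectors belonging to distinct eigenvalues of a symmetric matrix are orthogonal.
orthogonal = dot(pairs[0][1], pairs[1][1]) == 0

print(checks, orthogonal)  # [True, True] True
```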

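Calculating eigenvalues means finding the roots of the characteristic equation. For a 2x2 matrix this is a quadratic, λ² - tr(A)λ + det(A) = 0, which can be solved with the quadratic formula. Triangular and diagonal matrices are easier still: their eigenvalues are the diagonal entries. For larger matrices, numerical methods or software tools are used, because polynomials of degree five or higher have no general closed-form solution.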
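For the 2x2 case, the characteristic equation can be solved directly with the quadratic formula. The sketch below uses the standard-library `cmath` module so that complex eigenvalues are handled too. The function name `eigenvalues_2x2` is hypothetical.

```python
import cmath


def eigenvalues_2x2(m):
    """Roots of lambda^2 - trace*lambda + det = 0 for a 2x2 matrix."""
    (a, b), (c, d) = m
    trace = a + d
    determinant = a * d - b * c
    # cmath.sqrt returns a complex result, so a negative discriminant is fine.
    disc = cmath.sqrt(trace * trace - 4 * determinant)
    return (trace + disc) / 2, (trace - disc) / 2


# Symmetric matrix: both eigenvalues are real (3 and 1).
lam1, lam2 = eigenvalues_2x2([[2, 1], [1, 2]])

# 90-degree rotation: no real eigenvalues, the roots are i and -i.
rot1, rot2 = eigenvalues_2x2([[0, -1], [1, 0]])

print(lam1, lam2)
print(rot1, rot2)
```

The rotation example shows why a real matrix may have no real eigenvalues: a rotation by 90 degrees keeps no real direction fixed.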

Eigenvalues and eigenvectors provide a deeper understanding of matrix transformations and their impact on vectors. They have applications in various areas, including signal processing, image compression, and solving differential equations.

Rank: Determining the Dimensionality of a Matrix

The rank of a matrix is a measure of its dimensionality and provides insights into its linear independence and structural properties. It represents the maximum number of linearly independent rows or columns in the matrix.

The rank has several important characteristics and applications:

- Definition: The rank of a matrix is the maximum number of linearly independent rows, which always equals the maximum number of linearly independent columns. It is denoted by rank(A) or r(A).

- Row and Column Spaces: The rank of a matrix is equal to the dimension of its row space and column space. These spaces represent the span of the rows and columns of the matrix, respectively.

- Full Rank: A matrix is said to have full rank if its rank is equal to the minimum of its number of rows and columns. A square matrix is invertible exactly when it has full rank. For a non-square matrix, full column rank means Ax = b has at most one solution, and full row rank means Ax = b has at least one solution for every b.

- Null Space: The null space of A is the set of all solutions to the homogeneous system Ax = 0. Its dimension, the nullity, is tied to the rank by the rank-nullity theorem: rank(A) + nullity(A) = n, where n is the number of columns of A.

To calculate the rank by hand, reduce the matrix to row echelon form with Gaussian elimination and count the pivots (equivalently, the non-zero rows). In floating-point computation, the singular value decomposition (SVD) is more reliable: the rank is the number of singular values above a small tolerance.
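The pivot-counting procedure can be sketched in plain Python as follows. It uses exact fractions from the standard library to avoid rounding issues. The function name `rank` and the example matrix `A` are assumptions for illustration, and this is not how numerical libraries compute rank.

```python
from fractions import Fraction


def rank(matrix):
    """Rank via Gaussian elimination over exact rationals (counts pivots)."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    if not rows:
        return 0
    n_rows, n_cols = len(rows), len(rows[0])
    pivot_row = 0
    for col in range(n_cols):
        # Look for a non-zero entry in this column at or below pivot_row.
        pivot = next(
            (r for r in range(pivot_row, n_rows) if rows[r][col] != 0), None
        )
        if pivot is None:
            continue  # no pivot in this column
        rows[pivot_row], rows[pivot] = rows[pivot], rows[pivot_row]
        # Eliminate the entries below the pivot.
        for r in range(pivot_row + 1, n_rows):
            factor = rows[r][col] / rows[pivot_row][col]
            rows[r] = [x - factor * y for x, y in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
        if pivot_row == n_rows:
            break
    return pivot_row


A = [[1, 2, 3],
     [2, 4, 6],   # twice the first row, so it adds nothing to the rank
     [1, 0, 1]]

r = rank(A)
nullity = len(A[0]) - r  # rank-nullity: rank + nullity = number of columns

print(r, nullity)  # 2 1
```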

The rank is a fundamental concept in linear algebra and has numerous applications. It is used in solving linear systems, analyzing the behavior of linear transformations, and understanding the structure of matrices.

Conclusion

In this blog post, we explored three invariants of matrices: the determinant, eigenvalues, and rank. The determinant measures how a transformation scales volume and whether it can be inverted, eigenvalues reveal the scaling factors along the directions the transformation leaves unchanged, and the rank gives the number of linearly independent rows or columns, which is the dimension of the matrix's image.

Understanding these invariants is crucial for solving complex problems in linear algebra and applying matrix theory to various fields. By analyzing these invariants, we can gain a deeper understanding of matrix transformations, solve systems of linear equations, and make informed decisions in a wide range of applications.

FAQ

What is the determinant of a matrix used for?

The determinant is used to determine the invertibility of a matrix, solve systems of linear equations, and analyze volume scaling in geometric transformations.

How are eigenvalues and eigenvectors related to matrix transformations?

Eigenvalues and eigenvectors represent the characteristic values and directions of transformation for a matrix. They provide insights into how the matrix transforms vectors.

What is the significance of the rank of a matrix?

The rank of a matrix indicates its dimensionality and linear independence. It helps in solving linear systems, understanding matrix transformations, and analyzing the structure of matrices.